Date: 30th of June 2025

Conference Website: International Conference on Intelligent Autonomous Systems 2025

Time: 9AM - 5:30PM

Location: Genova Blue District, Genoa, Italy - Cuspide A, and on Zoom

Program

| Time (GMT+2) | Activity |
|---|---|
| 09:00 | Ice-breaking activity |
| 09:30 | Keynote Talk - Silvia Rossi |
| 10:00 | Lightning Talk - Isabel Neto |
| 10:30 | Coffee Break |
| 11:00 | Keynote Talk - Oliver Bendel |
| 11:30 | (G)ROUND Table |
| 12:30 | Lunch (not provided) |
| 14:00 | Short Paper Presentations |
| 14:40 | Tutorial - Lorenzo Ferrini |
| 16:00 | Coffee Break |
| 16:25 | Demos for the General Public |

For any inquiry, please contact us at workshop.ground@gmail.com.

Keynote Speakers

Oliver Bendel

Professor, FHNW University of Applied Sciences and Arts Northwestern Switzerland

Robots, chatbots, and voice assistants in the classroom

Chatbots, voice assistants, and robots – both programmable machines and social robots – have been used in learning for decades. Prof. Dr. Oliver Bendel from the FHNW School of Business in Switzerland presents his own projects from the past 15 years. Some of his chatbots and voice assistants, such as GOODBOT, BESTBOT, and SPACE THEA, recognized user problems and responded appropriately. They showed empathy and emotion. Pepper was used as an educational application for children with diabetes, and Alpha Mini as an educational application for elementary schools. Chatbots for dead, endangered, and extinct languages such as @ve, @llegra and kAIxo can be integrated into learning environments for all ages. Today, the technology philosopher and information systems expert mainly uses GPTs such as Social Robotics Girl and Digital Ethics Girl in his courses. They can receive and answer questions from several students at the same time, even if the questions are asked in different languages. They are specialists in their field thanks to prompt engineering and retrieval-augmented generation (RAG). In his talk, Oliver Bendel will ask how chatbots, voice assistants, and social robots will be designed as adaptive systems for multi-user settings in the future. These capabilities are especially important in the classroom.


Silvia Rossi

Professor, University of Naples

Personalization and Context-awareness in Service Robotics: from Individuals to Groups

This talk presents our approach to enabling robotic systems to engage users in context-aware, personalized interactions through multimodal communication. In service robotics, the ability to deliver natural and adaptive interactions is key to fostering user engagement, trust, and long-term acceptance. We explore how robots can interpret multimodal human cues—such as gestures, facial expressions, and speech—to infer users’ intentions, social and situational contexts, and likely future actions. This understanding enables robots to provide personalized assistance through actions like monitoring, coaching, and motivation, while adapting both verbal and non-verbal behaviors to individual users. We also address the challenges posed by multi-user scenarios, where robots must assess group dynamics and manage engagement across multiple parties in both public and private environments. The talk will highlight our findings and discuss the technical and interactional challenges of extending personalization to multi-party settings in Human-Robot Interaction.


Lightning Speaker

Isabel Neto

Assistant Professor, University of Lisbon

Fostering Inclusion among Mixed-Ability Children through Social Robots

Children of diverse abilities and backgrounds are increasingly integrated into mainstream classrooms. However, simply placing children with and without disabilities—or migrant and native children—together in mixed-ability settings does not ensure inclusion. Many children with disabilities or from migrant backgrounds still experience exclusion, including limited social interaction and an increased risk of isolation.

Group work has the potential to foster inclusion by making activities more accessible and addressing individual challenges such as shyness, disabilities, or cultural differences. It also helps develop essential social skills, including collaboration, negotiation, and self-regulation.

Social robots have shown promise in enhancing group work and supporting inclusive dynamics. In this talk, I will share our research, along with the findings and challenges we encountered in using social robots in school settings to promote inclusion. I will discuss how a robot’s role, sensors, perceived behavior, and interaction within different group configurations can influence both actual inclusion and children’s perception of it.


Tutorial

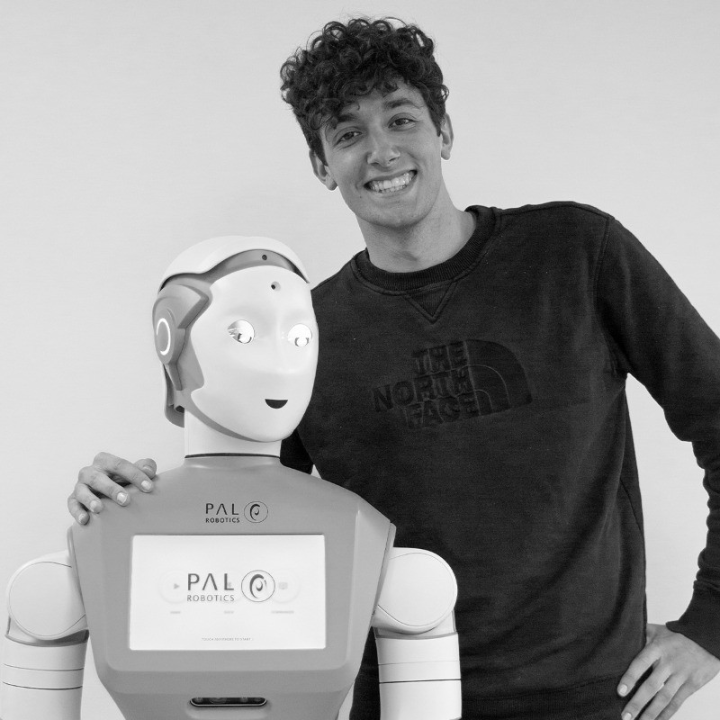

Lorenzo Ferrini

PhD Student, PAL Robotics

ROS4HRI

This tutorial provides an up-to-date overview of the state of the art in using the Robot Operating System (ROS 2) to develop robots with socio-cognitive capabilities. It begins with a brief introduction to the ROS4HRI framework, demonstrating its application in constructing a complete social robot architecture—from human perception to expressive social interaction. The tutorial then illustrates the software integration required to realize an autonomous social robot, combining open-source ROS 2-based social perception modules, a semantic knowledge base, and a Large Language Model. A PAL Robotics TIAGo Head will be used to showcase the system running on real hardware.


Accepted Papers

Co-Designing SONRIE: Tailoring a Social Robot for Multicultural Groups of Children

Anna Allegra Bixio, Alice Nardelli, Alice Stopponi, Maria Filomia, Alessia Bartolini and Carmine Recchiuto

Co-design is widely used in educational contexts to make stakeholders active participants in the learning process. This study presents the co-design process conducted with teachers, educators, and families prior to introducing a social robot in four highly culturally diverse Italian preschools and one nursery. We consider co-design an essential step, as it allows teachers and educators to tailor the robot’s unique characteristics to effectively support their pedagogical practices while incorporating children’s cultural awareness into the robot’s design. Results highlight the importance of co-creating robotic applications before deployment in order to develop a framework tailored to a specific educational context.

The Space Between Us: A Methodological Framework for Researching Bonding and Proxemics in Situated Group-Agent Interactions

Ana Müller and Anja Richert

This paper introduces a multimethod framework for studying spatial and social dynamics in real-world group-agent interactions with socially interactive agents. Drawing on proxemics and bonding theories, the method combines subjective self-reports and objective spatial tracking. Applied in two field studies in a museum (N = 187) with a robot and a virtual agent, the paper addresses the challenges in aligning human perception and behavior. We focus on presenting an open source, scalable, and field-tested toolkit for future studies in this field.

Banner designed by Chahin Mohamed. "Lo-Fi Cyberpunk animated". August, 2021. https://dribbble.com/shots/18947748-Lo-Fi-Cyberpunk-animated